Designing Astradot's Cloud Platform, Part 2: Terraform

In our previous blog post, we explored our AWS architecture. In this post, we discuss the challenges we encountered while fully adopting Infrastructure-as-Code with Terraform. We also cover how we addressed them to improve developer efficiency and runtime performance, maintain security, and reduce costs.

We chose HashiCorp's Terraform Cloud as our Terraform platform. On Terraform Cloud, additional parallelism (concurrent runs) and self-hosted runner agents are expensive. Our Terraform architecture therefore had to account for both in order to keep costs down.

Module Management

Because we use a single monorepo for the entire company, the Terraform code for every AWS account lives in the same repository. Each AWS account is mapped to its own workspace in Terraform Cloud.

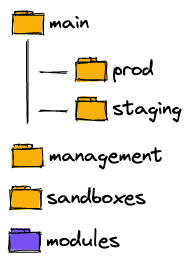

Initially, our folder structure was organized by AWS Organizational Units, with each AWS account mapped to a separate folder. We employed a separate 'modules' folder to store all the modules used by any AWS account. Terraform Cloud's excellent monorepo support made this structure effective for us.

Following the Bazel philosophy of 'one version only,' we do not version our modules: only a single version of each module is ever in use. Under this structure, a change to a module shared between staging and production triggers a Terraform plan in both. We first apply the change in staging and, if that succeeds, apply it in production. For this reason we keep module changes small and incremental. That way we can validate them in staging quickly, and production does not sit in a 'planned' state for long.
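
The staging-then-production flow can be sketched as a small promotion loop. This is a minimal illustration, not our actual tooling. `promote`, the stage names, and the `apply_fn` callback are hypothetical stand-ins for applying an already-planned run in each workspace.

```python
from typing import Callable, List, Optional, Tuple


def promote(
    stages: List[str], apply_fn: Callable[[str], bool]
) -> Tuple[List[str], Optional[str]]:
    """Apply a module change stage by stage, in order (hypothetical helper).

    Stops at the first stage whose apply fails, so later stages (e.g.
    production) are never applied after a failed staging apply.
    Returns the stages that were applied and the stage that failed, if any.
    """
    applied: List[str] = []
    for stage in stages:
        if not apply_fn(stage):
            # Halt the rollout; remaining stages keep their pending plans.
            return applied, stage
        applied.append(stage)
    return applied, None


# Usage with a fake apply function in which staging fails.
applied, failed = promote(["staging", "production"], lambda s: s != "staging")
print(applied, failed)  # [] staging
```

Keeping the order explicit in a list makes it easy to add stages that mirror production without changing the stop-on-failure rule.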

However, this approach created a problem. Because the 'modules' directory was shared globally across all AWS accounts, modifying any single module triggered a plan for every AWS account, whether or not that account used the module. Our Terraform Cloud workspace plans ran sequentially, one after another. This introduced significant latency between pushing a change and having it ready to apply. Often a module was used by only a few accounts, or by a single account in several places. Much of that time was therefore spent on unnecessary plans, and the problem grew with every AWS account we added. One option was to buy more parallelism on Terraform Cloud so that all workspace plans could run simultaneously. However, that was prohibitively expensive and felt like a brute-force fix. Instead, we addressed the underlying structure.
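
The cost of sequential plans can be put in rough numbers. Assume every triggered plan takes roughly the same time `t`, and Terraform Cloud runs at most `c` plans at once. Under those assumptions, the last of `n` queued plans finishes after about ceil(n / c) × t. The helper below is a back-of-the-envelope sketch. The 20 workspaces and 3-minute plan time in the usage lines are illustrative assumptions, not measurements.

```python
import math


def queue_latency_minutes(n_plans: int, concurrency: int, plan_minutes: float) -> float:
    """Approximate time until the last of n_plans queued plans finishes.

    Assumes identical plan durations and that a queued plan starts as soon as
    a concurrency slot frees up, so plans complete in waves of `concurrency`.
    """
    if n_plans <= 0:
        return 0.0
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    waves = math.ceil(n_plans / concurrency)
    return waves * plan_minutes


# Hypothetical numbers: a shared-module change fanning out to 20 workspaces.
print(queue_latency_minutes(20, 1, 3.0))   # 60.0 (sequential)
print(queue_latency_minutes(20, 20, 3.0))  # 3.0 (fully parallel, but costly)
print(queue_latency_minutes(2, 1, 3.0))    # 6.0 (only the affected accounts)
```

Latency scales with the number of triggered workspaces divided by concurrency. It can therefore be cut either by paying for more concurrency or by triggering fewer workspaces.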

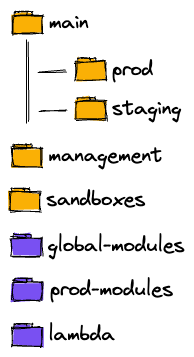

We restructured the global 'modules' directory into a more granular layout. Each account now has its own local 'modules' directory, containing modules used only within that account, possibly in several places. A 'prod-modules' directory holds modules used by the 'production' account and by accounts that closely mirror production, such as 'staging' and 'sandbox'. Lambda-related modules moved to a dedicated directory. Whenever we noticed a specific subset of accounts sharing more modules, we created a separate directory for those modules. We still keep a global 'modules' directory for functionality shared across all accounts, but it is much smaller and contains mainly IAM-related components. As a result, modifying a module now triggers plans only for the relevant accounts, typically one or two. This gives us the speed we wanted without paying for costly parallelism upgrades.
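
Terraform Cloud can restrict VCS-triggered runs to changes under a workspace's working directory and a list of trigger prefixes. The sketch below models that idea in plain Python to show why the granular layout cuts the number of plans. The directory names, workspace names and the `affected_workspaces` helper are hypothetical, and the matching is a simplification of Terraform Cloud's actual rules.

```python
from typing import Dict, List, Set

# Hypothetical layout: each workspace watches its own folder (which includes
# its account-local 'modules' directory) plus the shared directories it uses.
WORKSPACE_PREFIXES: Dict[str, List[str]] = {
    "production": ["accounts/production/", "prod-modules/", "modules/"],
    "staging": ["accounts/staging/", "prod-modules/", "modules/"],
    "sandbox": ["accounts/sandbox/", "prod-modules/", "modules/"],
    "data": ["accounts/data/", "modules/"],
    "security": ["accounts/security/", "modules/"],
}


def affected_workspaces(changed_files: List[str],
                        prefixes: Dict[str, List[str]]) -> Set[str]:
    """Return the workspaces whose watched prefixes match any changed file."""
    return {
        ws
        for ws, watched in prefixes.items()
        if any(f.startswith(p) for f in changed_files for p in watched)
    }


print(sorted(affected_workspaces(["prod-modules/rds/main.tf"], WORKSPACE_PREFIXES)))
# ['production', 'sandbox', 'staging']
print(sorted(affected_workspaces(["accounts/data/modules/etl/main.tf"], WORKSPACE_PREFIXES)))
# ['data']
```

A change to the slimmed-down global 'modules' directory still fans out to every workspace. A change to an account-local module triggers only that account's plan.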

VPC Execution

Some Terraform workloads, however, must run inside our VPC. For instance, modifying the EKS 'aws-auth' ConfigMap requires Terraform to access resources inside our VPC. This ConfigMap is an AWS-specific mechanism that maps IAM identities to Kubernetes users and groups. Meeting this seemingly simple requirement for a small task took significant effort. To address it, we used our CI solution, GitHub Actions (GA), and set up a self-hosted GA runner within our VPC. A GA workflow runs Terraform on this runner while still using Terraform Cloud for remote state storage, with a separate 'eks' workspace for each account.
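
For context, the 'aws-auth' ConfigMap lives in the `kube-system` namespace and maps IAM roles to Kubernetes users and groups through its `mapRoles` entry. Because it is an ordinary Kubernetes object, changing it means talking to the cluster's Kubernetes API rather than to AWS APIs. The Python sketch below only builds the manifest, to show what is being managed. The role ARN is a placeholder, and serializing `mapRoles` as JSON assumes the consumer's YAML parser accepts JSON, which YAML parsers generally do.

```python
import json
from typing import Dict, List


def aws_auth_configmap(role_mappings: List[Dict[str, object]]) -> Dict[str, object]:
    """Build an aws-auth ConfigMap manifest as a plain dict (illustrative only).

    Each mapping needs 'rolearn', 'username' and 'groups'. ConfigMap data
    values must be strings, so the list is serialized (here as JSON).
    """
    required = {"rolearn", "username", "groups"}
    for m in role_mappings:
        missing = required - set(m)
        if missing:
            raise ValueError(f"mapping is missing keys: {sorted(missing)}")
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "aws-auth", "namespace": "kube-system"},
        "data": {"mapRoles": json.dumps(role_mappings, indent=2)},
    }


# Hypothetical node-role mapping with a placeholder account ID and role name.
manifest = aws_auth_configmap([
    {
        "rolearn": "arn:aws:iam::111122223333:role/example-node-role",
        "username": "system:node:{{EC2PrivateDNSName}}",
        "groups": ["system:bootstrappers", "system:nodes"],
    }
])
print(manifest["data"]["mapRoles"])
```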

Terraform Cloud does offer an agent that can run within your VPC, which could have removed the need for GA in this scenario. However, the agent requires upgrading to the highest Terraform Cloud tier. We judged that not worth the expense compared with a GA self-hosted runner. We relied instead on GA's security model, which restricts execution based on a checksum of the workflow file, together with GitHub's group permissions. This gave us security guarantees comparable to those offered by Terraform Cloud.
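
The idea of gating execution on a workflow file's checksum can be illustrated with a small pre-flight check. This is a hypothetical sketch of the concept, not GitHub's implementation. `sha256_of` and `workflow_is_trusted` are illustrative names.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Set


def sha256_of(path: Path) -> str:
    """Hex SHA-256 digest of a file's bytes, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def workflow_is_trusted(path: Path, allowed: Set[str]) -> bool:
    """True only if the workflow file's checksum is on the allowlist."""
    return sha256_of(path) in allowed


# Usage: any edit to the workflow changes its checksum and fails the check.
with tempfile.TemporaryDirectory() as tmp:
    wf = Path(tmp) / "terraform-eks.yml"
    wf.write_text("name: terraform-eks\n")
    allowed = {sha256_of(wf)}
    print(workflow_is_trusted(wf, allowed))  # True
    wf.write_text("name: terraform-eks\n# tampered\n")
    print(workflow_is_trusted(wf, allowed))  # False
```

For a check like this to mean anything, the allowlist must live somewhere the workflow being checked cannot modify.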

Conclusion

Over time, we have adopted Terraform for most of the infrastructure tools that support it, including Terraform Cloud itself. Our Terraform code for Terraform Cloud streamlines the management of workspaces, permissions, Terraform version upgrades, and related tasks.
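
Managing Terraform Cloud as code largely means deriving workspace settings from a single source of truth. The Python sketch below illustrates the idea under assumed conventions. The account list, the `account-<name>` naming scheme, the `accounts/<name>` working directories and the pinned version string are all hypothetical. In a real setup, values like these would feed resources such as the `tfe` provider's `tfe_workspace`, which this sketch does not reproduce.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical: one Terraform version pinned for every workspace, so an
# upgrade is a single-line change reviewed like any other code change.
TERRAFORM_VERSION = "1.5.7"


@dataclass(frozen=True)
class WorkspaceSpec:
    name: str
    working_directory: str
    terraform_version: str


def workspace_specs(accounts: List[str], version: str = TERRAFORM_VERSION) -> List[WorkspaceSpec]:
    """One workspace per AWS account, all on the same Terraform version."""
    return [
        WorkspaceSpec(
            name=f"account-{acct}",
            working_directory=f"accounts/{acct}",
            terraform_version=version,
        )
        for acct in sorted(accounts)
    ]


for spec in workspace_specs(["staging", "production"]):
    print(spec)
```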

Adopting Terraform has been quite a journey, but we feel we have now struck the right balance of performance, cost, and modularity in our Infrastructure-as-Code implementation.